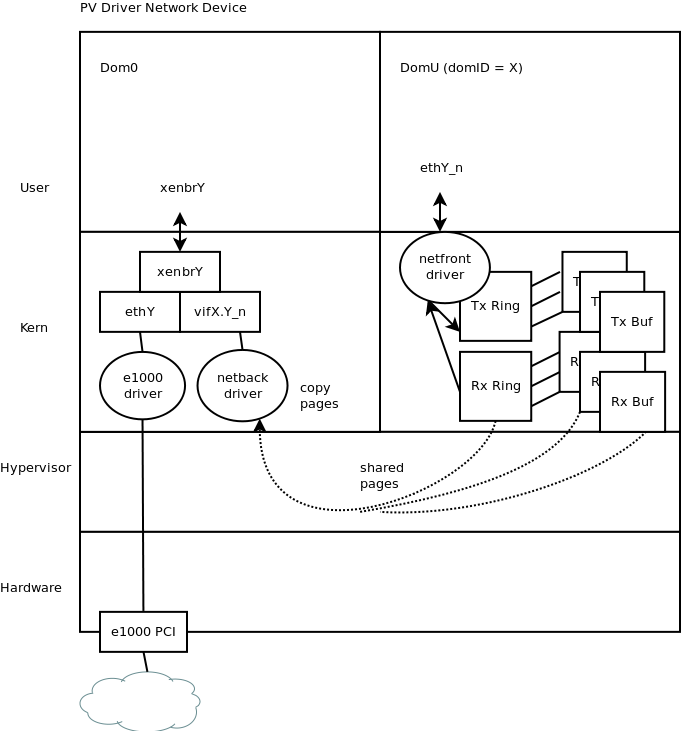

When RSC is on, some work that is otherwise done in the control domain by netback threads is instead performed by the user domains, in their netfronts. While this does not necessarily improve performance (in fact, it can easily make performance worse), it is useful when debugging CPU usage of a VM.

To reach optimum efficiency, we have to put processes that often interact "close-by" in terms of NUMA-ness, i.

Set up the connections you will use inside the user domains to use MTU

Changing these settings is only relevant if you want to optimise network connections for which one of the end-points is dom0 (not a user domain).

| | |
| --- | --- |
| Uploader: | Vira |
| Date Added: | 20 June 2006 |
| File Size: | 38.30 Mb |
| Operating Systems: | Windows NT/2000/XP/2003/2003/7/8/10 MacOS 10/X |
| Downloads: | 5975 |
| Price: | Free* [*Free Registration Required] |

When using the yum package manager, you can install it via RPMForge.

netperf 2.5.0-1 (armhf binary) in Ubuntu Precise

While I'm here, also fix Windows whitespace and other formatting errors, including moving WWW:

The following sub-sections provide more information about how to use some of the more common network performance tools.

Note that changing offload settings directly via ethtool will not persist the configuration through host reboots; to do that, use other-config of the xe command.

Note that the number of netback threads can be increased. All network throughput tests were, in the end, bottlenecked by VCPU capacity.

To increase the threshold number of netback threads to 12, write xen-netback. Feel free to experiment and let us know your findings. Here is a list of symptoms and associated probable causes and advice:

Settings recommended for a user domain VM will also work well for dom0. Our experiments indicate that turning on either of the two offload settings (GRO or LRO) in dom0 can give mixed VM-level throughput results, depending on the context.


RSC stands for receive-side copying. For Linux VMs, this is done with:

Hopefully, this guide will be of some help, and allow you to make good use of your network resources. The guide applies to XCP 1.

Our experiments show that tweaking TCP settings inside the VMs can lead to substantial network performance improvements. Otherwise bind(2) calls will fail, etc. Testing is done using a pair of programs: Please visit the link above for an overview of the scripts available, and for downloading any of them. With netperf installed on both sides, the following script can be used on either side to determine network throughput for transmitting traffic:
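The original script is not reproduced in this text. As a stand-in, here is a minimal Python sketch of a transmit throughput test driven through netperf's `TCP_STREAM` test. The function names and the default duration are assumptions made for illustration, and the parser assumes the banner-less output produced by `-P 0`, where throughput (in 10^6 bits/s by default) is the last field on the result line.

```python
import shutil
import subprocess


def netperf_command(host, duration_s=10, test="TCP_STREAM"):
    """Build the netperf command line for a single transmit test.

    -H: remote host running netserver, -t: test name,
    -l: test length in seconds, -P 0: suppress the banner lines.
    """
    return ["netperf", "-H", host, "-t", test, "-l", str(duration_s), "-P", "0"]


def parse_throughput(output):
    """Return the throughput from banner-less TCP_STREAM output.

    Assumes the last non-empty line is the result line and that its
    final whitespace-separated field is the throughput.
    """
    lines = [line for line in output.splitlines() if line.strip()]
    if not lines:
        raise ValueError("no netperf output to parse")
    return float(lines[-1].split()[-1])


def measure(host, duration_s=10):
    """Run one test against `host` (netserver must be running there)."""
    if shutil.which("netperf") is None:
        raise RuntimeError("netperf is not installed on this machine")
    result = subprocess.run(
        netperf_command(host, duration_s),
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_throughput(result.stdout)
```

Calling `measure("<address of the other side>")` on either end would then report the throughput for traffic transmitted from that end.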

FreshPorts -- benchmarks/netperf: Network performance benchmarking package

In our case, the main two processes we are concerned about are the netfront PV drivers in the user domain and the corresponding netback network processing in the control domain.

Our research shows that 8 pairs with 2 iperf threads per pair work well for Debian-based Linux, while 4 pairs with 8 iperf threads per pair work well for Windows 7.
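To make the pair and thread counts concrete, the following Python sketch builds the iperf client command for one pair and computes the total number of concurrent streams. It relies only on the standard iperf options `-c` (client mode), `-P` (parallel client threads) and `-t` (duration); the function names, the `PROFILES` table and the default duration are hypothetical helpers, not part of the guide.

```python
# (pairs, iperf threads per pair) as reported in the text above.
PROFILES = {
    "debian": (8, 2),
    "windows7": (4, 8),
}


def iperf_client_command(server, threads, duration_s=60):
    """Build the iperf client command for one sender/receiver pair."""
    if threads < 1:
        raise ValueError("threads must be at least 1")
    return ["iperf", "-c", server, "-P", str(threads), "-t", str(duration_s)]


def total_streams(pairs, threads_per_pair):
    """Total number of concurrent TCP streams across all pairs."""
    return pairs * threads_per_pair
```

With these numbers, the Debian setup drives 16 concurrent streams and the Windows 7 setup drives 32, so the two configurations are not directly comparable stream for stream.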

While irqbalance does the job in most situations, manual IRQ balancing can prove better in some situations.
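On Linux, manual IRQ balancing is typically done by writing a hexadecimal CPU bitmask to `/proc/irq/<irq>/smp_affinity`. The sketch below shows how such a mask can be computed; the function names are hypothetical, the sketch is limited to CPUs 0 to 31 to avoid the comma-separated format used for larger masks, and writing the file requires root. A running irqbalance may later change the setting again, so it is usually stopped first.

```python
def affinity_mask(cpus):
    """Return the hex bitmask selecting the given CPU indices (0-31)."""
    mask = 0
    for cpu in cpus:
        if not 0 <= cpu < 32:
            raise ValueError("this sketch only handles CPUs 0-31")
        mask |= 1 << cpu
    return format(mask, "x")


def smp_affinity_path(irq):
    """Path of the affinity file for a given IRQ number."""
    return f"/proc/irq/{irq}/smp_affinity"


def pin_irq(irq, cpus):
    """Pin an IRQ to the given CPUs (must be run as root)."""
    with open(smp_affinity_path(irq), "w") as handle:
        handle.write(affinity_mask(cpus))
```

For example, `affinity_mask([3])` gives `"8"` and `affinity_mask([0, 1, 2, 3])` gives `"f"`.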

The checksum had also changed, but it seems only the tarball was rerolled - a comparison against an 'old' archive from ftp. See Tweaks on how to increase the queue length.

Netperf 2.5.0 is now released

However, since this version (the only version we recommend using) does not automatically parallelise over the available VCPUs, such parallelisation needs to be done manually in order to make better use of the available VCPU capacity. If you notice any specific scenarios where one performs better than the other, irrespective of the number of Iperf threads used, please let us know, and we will update this guide accordingly.
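One way to do the manual parallelisation is to start several test instances at once and add up their results. The Python sketch below is a generic illustration under that assumption: `run_once` is a hypothetical callable that runs a single test and returns its throughput, and using one instance per VCPU is a starting point rather than a recommendation from the guide.

```python
import os
from concurrent.futures import ThreadPoolExecutor


def default_instances():
    """One test instance per CPU visible to this (virtual) machine."""
    return os.cpu_count() or 1


def aggregate_throughput(run_once, instances):
    """Run `run_once` concurrently `instances` times and sum the results.

    Threads are sufficient here because each call is expected to spend
    its time waiting on an external benchmark process.
    """
    if instances < 1:
        raise ValueError("instances must be at least 1")
    with ThreadPoolExecutor(max_workers=instances) as pool:
        futures = [pool.submit(run_once) for _ in range(instances)]
        return sum(future.result() for future in futures)
```

A call such as `aggregate_throughput(my_single_test, default_instances())` would then report the combined throughput, where `my_single_test` is whatever function launches one benchmark run.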

As was mentioned before, a netfront is currently allocated in a round-robin fashion to available netbacks. Cleanup patches, category benchmarks: rename them to follow the make makepatch naming, and regenerate them. In the various tests that we performed, we observed no statistically significant difference in network performance for dom0-to-dom0 traffic.

See the Tweaks section below for information on how to enable this in some contexts.
